Arguably one of the most interesting and potentially influential bits of emerging tech around right now is the one-two punch of AI and machine learning.

The possibilities are, quite literally, endless in the games space. Want to improve the resolution of images and textures? Nvidia's research has you covered. Don't like cleaning up data from a motion capture session? Bam, Ubisoft says it has trained a machine to do what would take a human four hours in four minutes. And then there's Bossa Studios and Valve vet Chet Faliszek who reckon they can use AI to make video game narrative more dynamic and less scripted.

But - in my opinion - one of the more interesting applications of this tech bundle is what SpiritAI is up to. Founded by IBM vet Steve Andre, who worked on that company's Watson AI programme, the venture initially looked to bring this tech to the games space. Along the way, Andre met indie developer Dr Mitu Khandaker, now Spirit's CCO, and the duo decided to use this AI tech to improve interactions with NPCs and conversational characters in games.

This was in a post-GamerGate world, with Khandaker and co realising that what Spirit was working on - recognising what is being said and being able to provide a response - had potentially far more important applications.

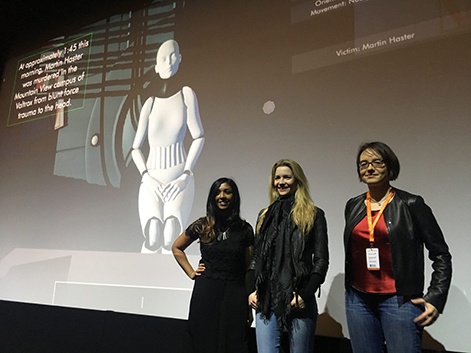

"We realised that if we have these AI systems which are all about really understanding players and what they are saying in context, what about trying to tackle online harassment," Khandaker (pictured, above right) explains.

"This was about a year into GamerGate so this is something I've been thinking about and advocating for a lot. The team let it brew from there. We had these problems that we were really passionate about working on."

The SpiritAI business is split across two products. Character Engine pursues the company's original goal of bringing good AI to video games to make more believable characters - more on that later - while Ally tackles online harassment.

"GamerGate was probably that crucial moment where we all realised that what we were doing wasn't working," says business development lead Peter Alau, speaking about traditional moderation techniques.

"The common practice right now is word redaction, which is a temporary solution. It says 'find this particular word or regular expressions, and hide them, redact them, mask them, alter them, whatever', and then change ratings on people or something along that line. These are non-scalable methods that require a lot of customer service handholding and it doesn't actually tackle the real issue, which is what is the intent of the person's conversation. We can be smacktalking each other with the foulest language and as long as it only affects the two of us, as old friends, it doesn't really matter. It would still trigger off the same issues and that's the starting point of where Ally came in - what do people mean and what are they trying to say."

Khandaker adds: "All conversation is about consent. Consent is really what we look for with Ally. A bad word is not a bad word if it is being consented to between friends. The same bad word being applied from a stranger is not consented to, so that's problematic. Also, keyword redaction doesn't really work in terms of online hate mobs and the realities of them. They will change the language they use to specifically try and lampshade what they are saying and make it harder to pick up on. What we need is systems that can smartly analyse patterns and the history of interactions between people which means we understand the context really well, which you can't do if you just have a rule for keywords."
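To make that limitation concrete, here is a minimal Python sketch of the kind of keyword redaction Alau and Khandaker describe. It is not SpiritAI's code and says nothing about how Ally works; the blocklist, the `redact` helper and the sample messages are all invented for illustration, with a mild word standing in for real abuse.

```python
import re

# Hypothetical blocklist; "noob" stands in for a genuinely offensive word.
BLOCKLIST = ["noob"]

# One case-insensitive pattern that matches any blocklisted word as a whole word.
PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(word) for word in BLOCKLIST) + r")\b",
    re.IGNORECASE,
)


def redact(message: str) -> str:
    """Mask every blocklisted word with asterisks of the same length."""
    return PATTERN.sub(lambda match: "*" * len(match.group(0)), message)


# Banter between old friends and abuse from a stranger are masked identically,
# because the rule only sees the word, not who is speaking to whom.
friend_banter = redact("nice shot, you noob")
stranger_abuse = redact("uninstall, you noob")

# A trivial respelling passes through the filter untouched.
evasion = redact("uninstall, you n00b")

print(friend_banter)
print(stranger_abuse)
print(evasion)
```

The filter treats the friendly and the hostile message the same way and misses the respelled one entirely, which is the gap between matching words and understanding context that both interviewees point to.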

As well as handling harassment, Alau says that Ally can help companies better understand what their communities really think about them. Right now, it's very easy for a vocal minority to dominate the narrative with its point of view, a problem he says the product will help with in the future.

"There are three sciences at work here: natural language understanding, classification and processing," he explains.

"When you have those working together along with the machine learning language that takes everything you've learnt so far and improves it over time and with more data, we can more precisely target the worst of the worst and conversely the best of the best. Ally is effectively a customer intelligence tool disguised as an anti-toxicity tool that can do a full analysis of the wide sentiment of what everybody in any online space is feeling. You have a group of people who are really upset and that group is really small but because of multiple accounts and devices, they look massive - then you have a group of people that's massively happy who say absolutely nothing and that's 90 per cent of your audience.

"What we are trying to do is give people a tool that gets them a much clearer understanding of their customer interactions. Obviously, they will highlight the worst and get rid of them or just try to convert them. Because we understand what they are saying and what the intention is and what the reactions are, we have a better chance of getting the best people promoted and the worst people demoted."

Alongside Ally's insight into the motivations and sentiments of a community, SpiritAI also has its Character Engine project, which is designed to make, as Khandaker puts it, "compelling conversational characters".

"Right now, yes, it's a problem that if you really try to solve you could with the best programmers and so on, but then you wouldn't have the expertise of all the folks with all the years of experience in interactive fiction and things like that that we've got on our team," she says.

"We really just want to make it easy for people who can write and understand how to write procedurally to be able to just create this narrative possibility space for characters."
